A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

GPU parallel computing for machine learning in Python: how to build a parallel computer: Takefuji, Yoshiyasu: 9781521524909: Amazon.com: Books

If I'm building a deep learning neural network with a lot of computing power to learn, do I need more memory, CPU or GPU? - Quora

GPU parallel computing for machine learning in Python: how to build a parallel computer (English Edition) eBook: Takefuji, Yoshiyasu: Amazon.es: Kindle Store

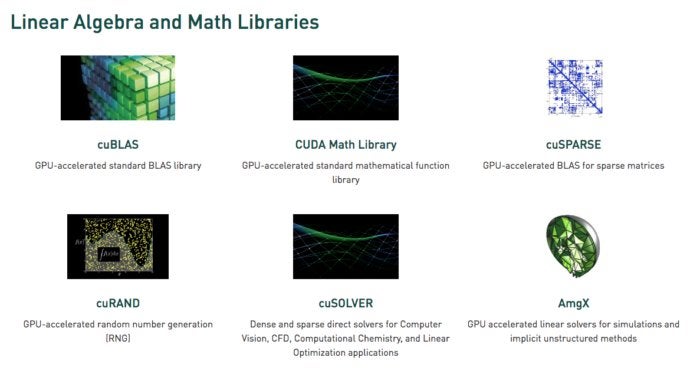

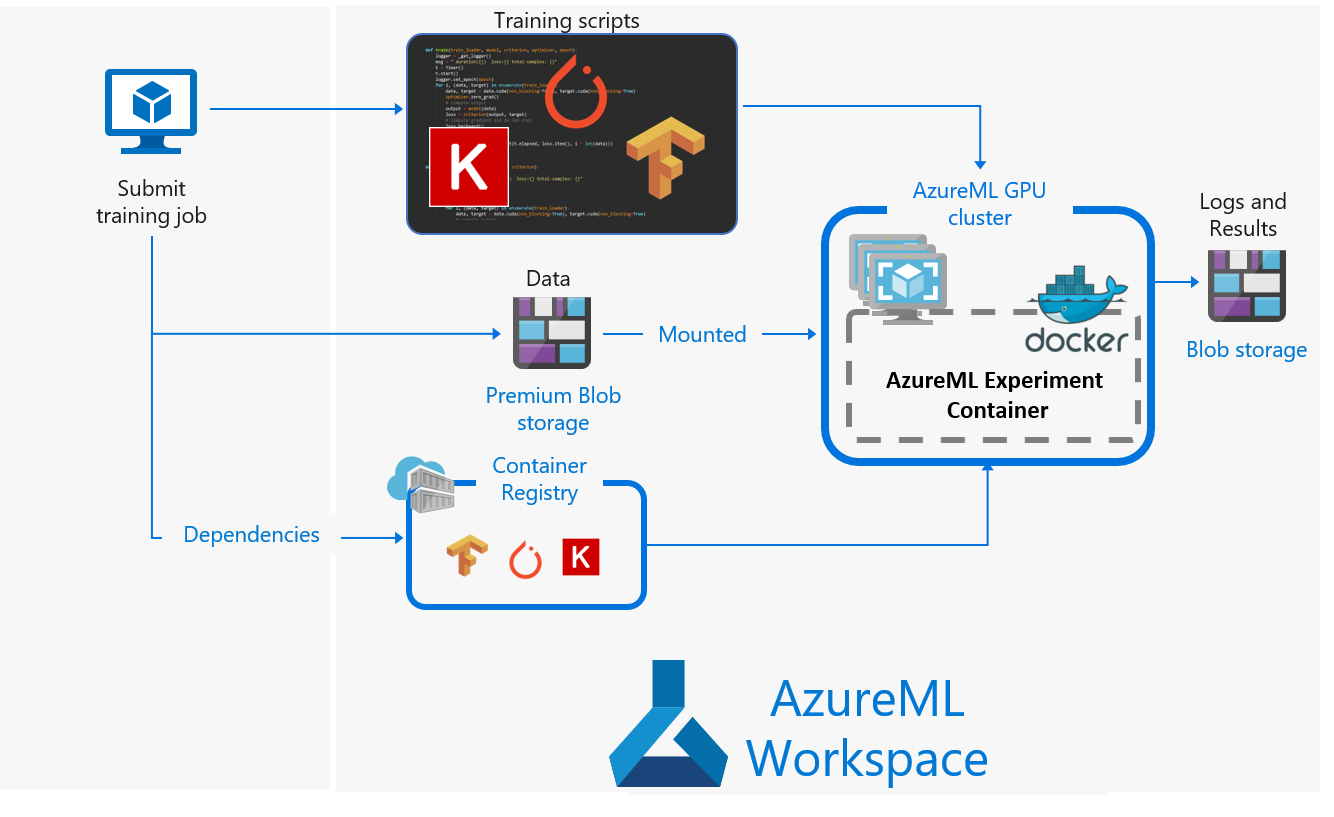

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

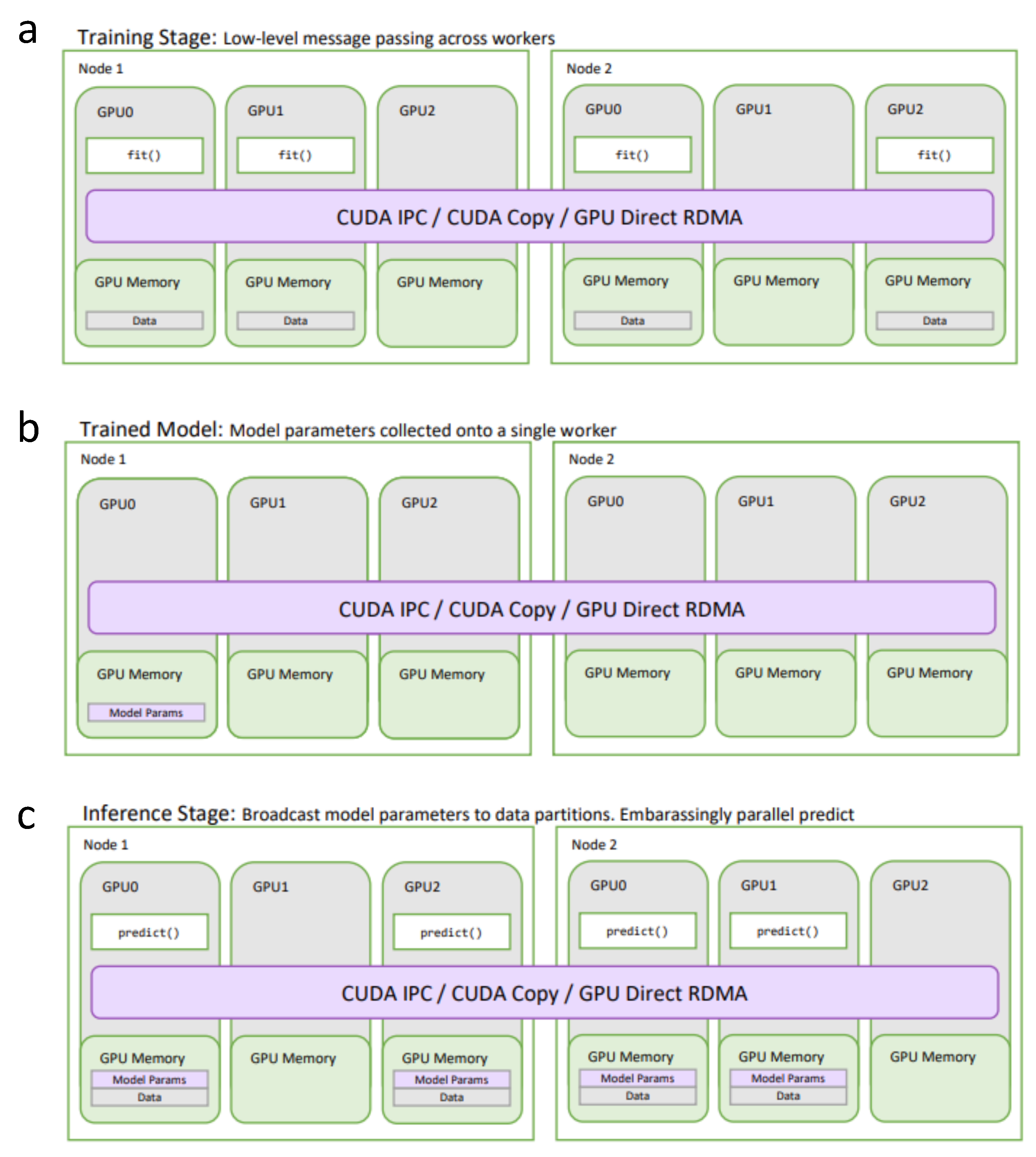

RAPIDS is an open source effort to support and grow the ecosystem of... | Download High-Resolution Scientific Diagram

Information | Free Full-Text | Machine Learning in Python: Main Developments and Technology Trends in Data Science, Machine Learning, and Artificial Intelligence | HTML
